What Sci-Fi Tells Interaction Designers About Gestural Interfaces

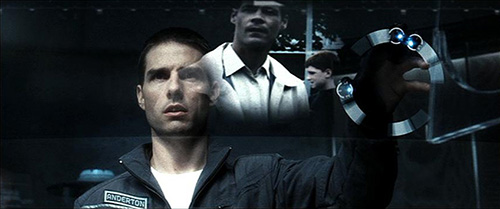

One of the most famous interfaces in sci-fi is gestural: the precog scrubber interface used by the Precrime police force in Minority Report. With it, Detective John Anderton uses gestures to “scrub” through the video-like precognitive visions of psychic triplets. After observing a future crime, Anderton rushes to the scene to prevent it and arrest the would-be perpetrator.

This interface is one of the most memorable things in a movie that is crowded with future technologies, and it is one of the most referenced interfaces in cinematic history. (In a quick and highly unscientific test, at the time of writing, we typed [sci-fi movie title] + “interface” into Google for each of the movies in the survey and compared the number of results. “Minority Report interface” returned 459,000 hits on Google, more than six times as many as the runner-up, which was “Star Trek interface” at 68,800.)

It’s fair to say that, to the layperson, the Minority Report interface is synonymous with “gestural interface.” The primary consultant to the filmmakers, John Underkoffler, had developed these ideas of gestural control and spatial interfaces through his company, Oblong, even before he consulted on the film. The real-world version is a general-purpose platform for multiuser collaboration. It’s available commercially through his company at nearly the same state of the art as portrayed in the film.

Though this article references Minority Report a number of times, two lessons are worth mentioning up front.

Figures 5.6a–b: Minority Report (2002)

Lesson: A Great Demo Can Hide Many Flaws.

Hollywood rumor has it that Tom Cruise, the actor playing John Anderton, needed continual breaks while shooting the scenes with the interface because it was exhausting. Few people can hold their hands above the level of their heart and move them around for any extended period. But these rests don’t appear in the film — a misleading omission for anyone who wants to use a similar interface for real tasks.

Although a film is not trying to be exhaustively detailed or to accurately portray a technology for sale, demos of real technologies often have the same problem. The apparent usability of an interface (in this example, of its gestural language) can be a misleading though highly effective sales tool, because a demo doesn’t need to show every use exhaustively.

Lesson: A Gestural Interface Should Understand Intent.

The second lesson comes from a scene in which Agent Danny Witwer enters the scrubbing room where Anderton is working and introduces himself while extending his hand. Being polite, Anderton reaches out to shake Witwer’s hand. The computer interprets Anderton’s change of hand position as a command, and Anderton watches as his work slides off the screen and is nearly lost. He then disregards the handshake to take control of the interface again and continue his work.

Figures 5.7a–d: Minority Report (2002)

One of the main problems with gestural interfaces is that the user’s body is the control mechanism, but the user intends to control the interface only part of the time. At other times, the user might be reaching out to shake someone’s hand, answer the phone or scratch an itch. The system must accommodate different modes: when the user’s gestures have meaning and when they don’t. This could be as simple as an on/off toggle switch somewhere, but the user would still have to reach to flip it.

Perhaps a pause command could be spoken, or a specific gesture reserved for such a command. Perhaps the system could watch the direction of the user’s eyes and regard the gestures made only when he or she is looking at the screen. Whatever the solution, the signal would be best in some other “channel” so that this shift of intentional modality can happen smoothly and quickly without the risk of issuing an unintended command.
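The ideas above (a spoken pause command, or honoring gestures only while the user looks at the screen) can be sketched as a small gate that sits between the gesture recognizer and the application. This is only an illustration: the `Frame` fields, the `IntentGate` class and the "pause"/"resume" phrases are hypothetical names, and the sketch assumes that some tracker already supplies a recognized gesture and a gaze flag.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Frame:
    """One moment of tracked input (hypothetical sensor outputs)."""
    gesture: Optional[str]   # name of the recognized gesture, or None
    gaze_on_screen: bool     # assumed eye-tracker flag: is the user looking at the display?


class IntentGate:
    """Lets gestures through only when the user appears to mean them."""

    def __init__(self) -> None:
        self.paused = False

    def handle_voice(self, phrase: str) -> None:
        # The mode switch arrives on a separate channel (speech),
        # so toggling it can never be mistaken for a gesture.
        if phrase == "pause":
            self.paused = True
        elif phrase == "resume":
            self.paused = False

    def filter(self, frame: Frame) -> Optional[str]:
        # Drop the gesture when paused or when the user is looking elsewhere.
        if self.paused or not frame.gaze_on_screen:
            return None
        return frame.gesture
```

Under these assumptions, the handshake would be dropped: Anderton is looking at Witwer, so `gaze_on_screen` is false and `filter` returns `None` instead of a command.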

Gesture Is A Concept That Is Still Maturing.

What about other gestural interfaces? What do we see when we look at them? A handful of other examples of gestural interfaces are in the survey, dating as far back as 1951, but the bulk of them appear after 1998 (Figure 5.8).

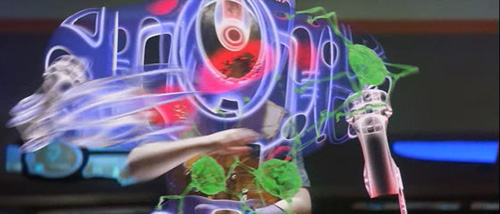

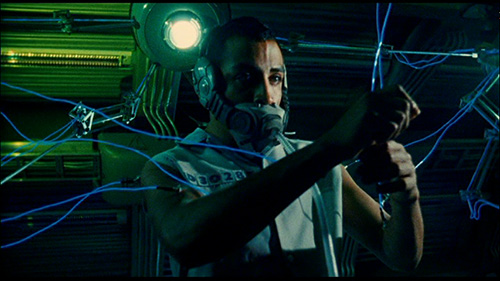

Figure 5.8a–d: Chrysalis (2007); Lost in Space (1998); The Matrix Reloaded (2003); Sleep Dealer (2008)

Looking at this group, we see an input technology whose role is still maturing in sci-fi. A lot of variation is apparent, with only a few core similarities among them. Of course, these systems are used for a variety of purposes, including security, telesurgery, telecombat, hardware design, military intelligence operations and even offshored manual labor.

Most of the interfaces let their users interact with no additional hardware, but the Minority Report interface requires its users to don gloves with lights at the fingertips, as does the telesurgical interface in Chrysalis (see Figure 5.8a). We imagine that this was partially for visual appeal, but it certainly would make tracking the exact positions of the fingers easier for the computer.

Hollywood’s Pidgin

Although none of the properties in the survey takes pains to explain exactly what each gesture in a complex chain of gestural commands means, we can look at the cause and effect of what is shown on screen and piece together a basic gestural vocabulary. Only seven gestures are common across properties in the survey.

1. Wave To Activate

The first gesture is waving to activate a technology, as if to wake it up or gain its attention. To activate his spaceship’s interfaces in The Day the Earth Stood Still, Klaatu passes a flat hand above their translucent controls. In another example, Johnny Mnemonic waves to turn on a faucet in a bathroom, years before it became common in the real world (Figure 5.9).

Figure 5.9a–c: Johnny Mnemonic (1995)

2. Push To Move

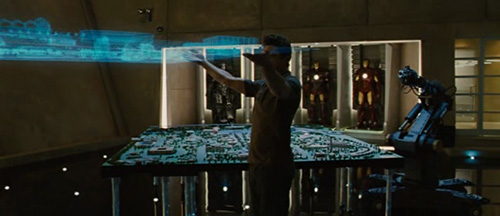

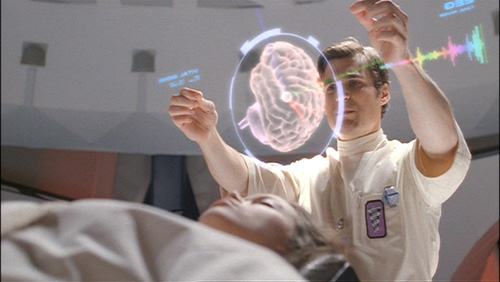

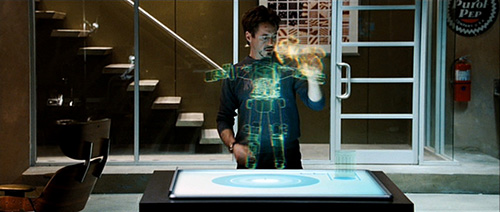

To move an object, you interact with it in much the same way as you would in the physical world: fingers manipulate; palms and arms push. Virtual objects tend to have the resistance and stiffness of their real-world counterparts for these actions. Virtual gravity and momentum may be “turned on” for the duration of these gestures, even when they’re normally absent. Anderton does this in Minority Report as discussed above, and we see it again in Iron Man 2 as Tony moves a projection of his father’s theme park design (Figure 5.10).

Figure 5.10a–b: Iron Man 2 (2010)

3. Turn To Rotate

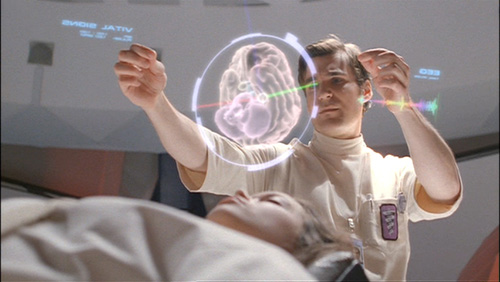

To turn objects, the user also interacts with the virtual thing as one would in the real world. Hands push opposite sides of an object in different directions around an axis and the object rotates. Dr. Simon Tam uses this gesture to examine the volumetric scan of his sister’s brain in an episode of Firefly (Figure 5.11).

Figure 5.11a–b: Firefly, “Ariel” (Episode 9, 2002)
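One way to read the two-handed turn is as the change in angle of the line between the hands. The sketch below assumes a hypothetical tracker that reports each hand as an (x, y) position in the plane of rotation; `rotation_angle` is an illustrative name, not something taken from the films or from a real tracking library.

```python
import math


def rotation_angle(start_left, start_right, now_left, now_right):
    """Return the signed rotation, in radians, implied by two moving hands.

    Each argument is an (x, y) hand position in the plane of rotation
    (an assumed tracker output). Positive means counterclockwise when
    the y axis points up.
    """
    # Direction of the line from the left hand to the right hand, before and after.
    before = math.atan2(start_right[1] - start_left[1], start_right[0] - start_left[0])
    after = math.atan2(now_right[1] - now_left[1], now_right[0] - now_left[0])
    # Wrap the difference into [-pi, pi) so a small turn never reads as a near-full one.
    return (after - before + math.pi) % (2 * math.pi) - math.pi
```

A real system would also need to decide which axis the hands are turning around; this sketch sidesteps that by working in a single plane.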

4. Swipe To Dismiss

Dismissing objects involves swiping the hands away from the body, either forcefully or without looking in the direction of the push. In Johnny Mnemonic, Takahashi dismisses the videophone on his desk with an angry backhanded swipe of his hand (Figure 5.12). In Iron Man 2, Tony Stark also dismisses uninteresting designs from his workspace with a forehanded swipe.

Figure 5.12a–c: Johnny Mnemonic (1995)

5. Point Or Touch To Select

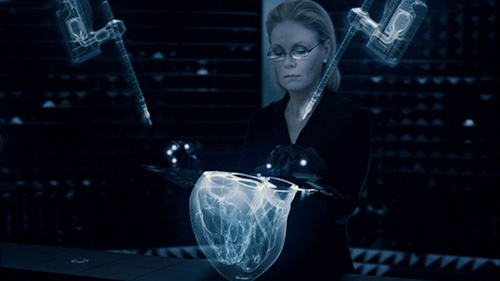

Users indicate options or objects with which they want to work by pointing a fingertip or touching them. District 9 shows the alien Christopher Johnson touching items in a volumetric display to select them (Figure 5.13a). In Chrysalis, Dr. Brügen must touch the organ to select it in her telesurgery interface (Figure 5.13b).

Figure 5.13a–b: District 9 (2009), Chrysalis (2007)

6. Extend The Hand To Shoot

Anyone who played cowboys and Indians as a child will recognize this gesture. To shoot with a gestural interface, one extends the fingers, hand and/or arm toward the target. (Making the pow-pow sound is optional.) Examples of this gesture include Will’s telecombat interface in Lost in Space (see Figure 5.8b), Syndrome’s zero-point energy beam in The Incredibles (Figure 5.14a) and Tony Stark’s repulsor beams in Iron Man (Figure 5.14b).

Figures 5.14a–b: The Incredibles (2004), Iron Man (2008)

7. Pinch And Spread To Scale

Given that there is no physical analogue to this action, its consistency across movies comes from the physical semantics: to make a thing bigger, indicate the opposite edges of the thing and drag the hands apart. Likewise, pinching the fingers together or bringing the hands together shrinks virtual objects. Tony Stark uses both of these gestures when examining models of molecules in Iron Man 2 (Figure 5.15).
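The spread-to-grow, pinch-to-shrink rule can be expressed as a ratio of distances. This is a minimal sketch, assuming a hypothetical tracker that reports the two grab points (fingertips or hands) as coordinate tuples; `scale_factor` is an illustrative name.

```python
import math


def scale_factor(start_a, start_b, now_a, now_b):
    """Return how much to scale an object grabbed at two points.

    Each argument is a coordinate tuple (2D or 3D) from an assumed tracker.
    A result above 1 grows the object; a result below 1 shrinks it.
    """
    start = math.dist(start_a, start_b)
    if start == 0:
        # Both grab points coincide, so no meaningful ratio exists; leave the size alone.
        return 1.0
    return math.dist(now_a, now_b) / start
```

Dragging the hands from one unit apart to two units apart gives a factor of 2; bringing them from two units apart to one gives 0.5.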

Though there are other gestures, the survey revealed no other strong patterns of similarity across properties. This will change if the technology continues to mature in the real world and in sci-fi. More examples of it may reveal a more robust language forming within sci-fi, or reflect conventions emerging in the real world.

Figures 5.15a–b: Iron Man 2 (2010)
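For reference, the seven-gesture vocabulary can be written down as a lookup table. The sketch below is only a summary of the list above: the gesture labels and the `Action` enum are hypothetical names, and it assumes that a recognizer has already classified the raw motion into one of these labels.

```python
from enum import Enum
from typing import Optional


class Action(Enum):
    ACTIVATE = "activate"
    MOVE = "move"
    ROTATE = "rotate"
    DISMISS = "dismiss"
    SELECT = "select"
    SHOOT = "shoot"
    SCALE = "scale"


# Hypothetical recognizer labels mapped to the seven common meanings.
VOCABULARY = {
    "wave": Action.ACTIVATE,
    "push": Action.MOVE,
    "turn": Action.ROTATE,
    "swipe": Action.DISMISS,
    "point": Action.SELECT,
    "touch": Action.SELECT,
    "extend_hand": Action.SHOOT,
    "pinch": Action.SCALE,
    "spread": Action.SCALE,
}


def interpret(gesture: str) -> Optional[Action]:
    """Return the conventional meaning of a gesture, or None if it has none."""
    return VOCABULARY.get(gesture)
```

A gesture outside the table, such as a hypothetical `"cup_ear"`, returns `None`, which marks the places where no convention exists yet.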

Opportunity: Complete The Set Of Gestures Required.

In the real world, users have some fundamental interface controls that movies never show but for which there are natural gestures. An example is volume control. Cupping or covering an ear with a hand is a natural gesture for lowering the volume, but because volume controls are rarely seen in sci-fi, the actual gesture for this control hasn’t been strongly defined or modeled for audiences. The first gestural interfaces to address these controls will have an opportunity to round out the vocabulary for the real world.

Lesson: Deviate Cautiously From The Gestural Vocabulary.

If these seven gestures are already established, it is because they make intuitive sense to different sci-fi makers and/or because the creators are beginning to repeat controls seen in other properties. In either case, the meaning of these gestures is beginning to solidify, and a designer who deviates from them should do so only with good reason or else risk confusing the user.

Direct Manipulation

An important thing to note about these seven gestures is that most are transliterations of physical interactions. This brings us to a discussion of direct manipulation. When used to describe an interface, direct manipulation refers to a user interacting directly with the thing being controlled — that is, with no intermediary input devices or screen controls.

For example, to scroll through a long document in an “indirect” interface, such as the Mac OS, a user might grasp a mouse and move a cursor on the screen to a scroll button. Then, when the cursor is correctly positioned, the user clicks and holds the mouse on the button to scroll the page. This long description seems silly only because it describes something that happens so fast and that computer users have performed for so long that they forget that they once had to learn each of these conventions in turn. But they are conventions, and each step in this complex chain is a little bit of extra work to do.

But to scroll a long document in a direct interface such as the iPad, for example, users put their fingers on the “page” and push up or down. There is no mouse, no cursor and no scroll button. In total, scrolling with the gesture takes less physical and cognitive work. The main promise of these interfaces is that they are easier to learn and use. But because they require sophisticated and expensive technologies, they haven’t been widely available until the past few years.
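The direct version reduces to one rule: the content moves with the finger. Here is a minimal sketch of that rule, assuming screen coordinates in which y grows downward; `scroll_offset` and its parameters are hypothetical and do not represent any real toolkit's API.

```python
def scroll_offset(offset, finger_delta_y, content_height, viewport_height):
    """Return the new scroll offset after the finger moves by finger_delta_y.

    offset is how far the top of the viewport sits below the top of the content.
    Pushing the page up (a negative delta, since y grows downward) reveals
    content further down, so the offset increases.
    """
    max_offset = max(0.0, content_height - viewport_height)
    new_offset = offset - finger_delta_y
    # Clamp so the page cannot be dragged past its first or last line.
    return min(max(new_offset, 0.0), max_offset)
```

There is no cursor position or scroll-button state to track; the only inputs are the finger's movement and the size of the page.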

In sci-fi, gestural interfaces and direct manipulation strategies are tightly coupled. That is, it’s rare to see a gestural interface that isn’t direct manipulation. Tony Stark wants to move the volumetric projection of his father’s park, so he sticks his hands under it, lifts it and walks it to its new position in his lab. In Firefly, when Dr. Tam wants to turn the projection of his sister’s brain, he grabs the “plane” that it’s resting on and pushes one corner and pulls the other as if it were a real thing. Minority Report is a rare but understandable exception because the objects Anderton manipulates are video clips, and video is a more abstract medium.

This coupling isn’t a given. It’s conceptually possible to run Microsoft Windows 7 entirely with gestures, and it is not a direct interface. But the fact that gestural interfaces erase the intermediaries on the physical side of things fits well with erasing the intermediaries on the virtual side of things, too. So, gesture is often direct. But this coupling doesn’t work for every need a user has. As we’ve seen above, direct manipulation works for gestures that correspond closely to physical actions in the real world. But moving, scaling and rotating aren’t the only things one might want to do with virtual objects. What about more abstract control?

As we would expect, this is where gestural interfaces need additional support. Abstractions by definition don’t have easy physical analogues, and so they require some other solution. As seen in the survey, one solution is to add a layer of graphical user interface (GUI), as we see when Anderton needs to scrub back and forth over a particular segment of video to understand what he’s seeing, or when Tony Stark drags a part of the Iron Man exosuit design to a volumetric trash can (Figure 5.16). These elements are controlled gesturally, but they are not direct manipulation.

Figures 5.16a–c: Minority Report (2002), Iron Man (2008)

Invoking and selecting from among a large set of these GUI tools can become quite complicated and place a DOS-like burden on memory. Extrapolating this chain of needs might very well lead to a complete GUI for interacting with any fully featured gestural interface, unlike the clean, sparse gestural interfaces that sci-fi likes to present. The other solution seen in the survey for handling these abstractions is the use of another channel altogether: voice.

In one scene from Iron Man 2, Tony says to the computer, “JARVIS, can you kindly vacuform a digital wireframe? I need a manipulable projection.” Immediately JARVIS begins the scan. Such a command would be much more complex to issue gesturally. Language handles abstractions very well, and humans are pretty good at using language, so this makes language a strong choice.

Other channels might also be employed: GUI, finger positions and combinations, expressions, breath, gaze and blink, and even brain interfaces that read intention and brainwave patterns. Any of these might conceptually work but may not take advantage of the one human medium especially evolved to handle abstraction — language.

Lesson: Use Gesture For Simple, Physical Manipulations, And Use Language For Abstractions.

Gestural interfaces are engaging and quick for interacting in “physical” ways, but outside of a core set of manipulations, gestures are complicated, inefficient and difficult to remember. For less concrete abstractions, designers should offer some alternative means, ideally linguistic input.

Gestural Interfaces: An Emerging Language

Gestural interfaces have enjoyed a great deal of commercial success over the last several years with the popularity of gaming platforms such as Nintendo’s Wii and Microsoft’s Kinect, as well as with gestural touch devices like Apple’s iPhone and iPad. The term “natural user interface” has even been bandied about as a way to try to describe these. But the examples from sci-fi have shown us that gesturing is “natural” for only a small subset of possible actions on the computer. More complex actions require additional layers of other types of interfaces.

Gestural interfaces are highly cinemagenic, rich with action and graphical possibilities. Additionally, they fit the stories of remote interactions that are becoming more and more relevant in the real world as remote technologies proliferate. So, despite their limitations, we can expect sci-fi makers to continue to include gestural interfaces in their stories for some time, which will help to drive the adoption and evolution of these systems in the real world.

This post is an excerpt of Make It So: Interaction Design Lessons From Science Fiction by Nathan Shedroff and Christopher Noessel (Rosenfeld Media, 2012). You can read more analysis on the book’s website.

Further Reading

- To Use Or Not To Use: Touch Gesture Controls For Mobile Interfaces

- In-App Gestures And Mobile App User Experience

- Browser Input Events: Can We Do Better Than The Click?

- Retro Futurism At Its Best: Designs and Tutorials
